One issue that virtualization administrators must routinely deal with is virtual machine (VM) sprawl. Microsoft's licensing policy for Windows Server 2012 Datacenter Edition, and tools such as System Center Virtual Machine Manager, have made it too easy to create VMs; if left unchecked, VMs can proliferate at a staggering rate.

The problem of VM sprawl is most often dealt with by placing limits on VM creation or setting policies to automatically expire aging virtual machines. However, it is also important to consider the impact VM sprawl can have on your storage infrastructure.

As more and more VMs are created, storage consumption can become an issue. More often, however, resource contention is the bigger problem. Virtual hard disks often reside on a common volume or on a common storage pool, which means the virtual hard disks must compete for IOPS.

One of the best tools for reducing storage IOPS is file system deduplication. However, there are some important limitations that must be considered.

Microsoft introduced native file system deduplication in Windows Server 2012. Although this feature at first seemed promising, it had two major limitations: Native deduplication was not compatible with the new ReFS file system; and native deduplication was not supported for volumes containing virtual hard disks attached to a running virtual machine.

Microsoft did some more work on the deduplication feature in Windows Server 2012 R2 and now you can deduplicate a volume containing virtual hard disks that are being actively used. But there is one major caveat: This type of deduplication is only supported for virtual desktops, not virtual servers.
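As a rough sketch of what enabling this looks like on Windows Server 2012 R2, the commands below install the deduplication role service and turn on deduplication with the Hyper-V usage type. The drive letter E: is a placeholder assumed for illustration, and the volume is assumed to hold the VHD or VHDX files of the virtual desktops.

```powershell
# Install the Data Deduplication role service (one-time step).
Install-WindowsFeature -Name FS-Data-Deduplication

# Enable deduplication on the volume that holds the virtual desktop disks.
# "E:" is a placeholder drive letter, not a value from this article.
Enable-DedupVolume -Volume "E:" -UsageType HyperV

# Review the deduplication settings now applied to the volume.
Get-DedupVolume -Volume "E:" | Format-List
```

The HyperV usage type is what tells the deduplication engine to work with virtual hard disks that are in use; the default usage type is intended for general-purpose file shares.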

Managing QoS for effective storage I/O

Another tool for reducing the problem of storage I/O contention is a new Windows Server 2012 R2 feature called Quality of Service Management (formerly known as Storage QoS). This feature allows you to reserve storage IOPS for a virtual hard disk by specifying a minimum number of IOPS. IOPS are measured in 8 KB increments. Similarly, you can cap a virtual hard disk's I/O operations by specifying a maximum number of allowed IOPS.
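The minimum and maximum values can be set in the Hyper-V Manager or through PowerShell. The following is a minimal sketch using the Hyper-V module on Windows Server 2012 R2; the VM name VM01 and the IOPS values are placeholders chosen for illustration, not recommendations.

```powershell
# "VM01" is a placeholder VM name; the IOPS values are illustrative only.
# Both values are counted in normalized 8 KB units.
# As written, this applies the same limits to every virtual hard disk on the VM.
Get-VMHardDiskDrive -VMName "VM01" |
    Set-VMHardDiskDrive -MinimumIOPS 100 -MaximumIOPS 500

# Confirm the settings that were applied.
Get-VMHardDiskDrive -VMName "VM01" |
    Select-Object VMName, Path, MinimumIOPS, MaximumIOPS
```

To limit a single disk instead, filter the output of Get-VMHardDiskDrive (for example, by controller number and location) before piping it to Set-VMHardDiskDrive.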

Microsoft introduced Windows Storage Spaces in Windows Server 2012 as a way of abstracting physical storage into a pool of storage resources. You can create virtual disks on top of a storage pool without having to worry about physical storage allocations.

Microsoft expanded the Windows Storage Spaces feature in Windows Server 2012 R2 by introducing new features such as three-way mirroring and storage tiering. You can implement the tiered storage feature on a per-virtual-hard-disk basis and allow "hot blocks" to be dynamically moved to a solid-state drive (SSD)-based storage tier so they can be read with the best possible efficiency.

The tiered storage feature greatly improves VM performance, but there are some limitations. The most pressing one is that storage tiers can only be used with mirrored virtual disks or simple virtual disks. Storage tiers cannot be used with parity disks, even though this was allowed in the preview release.

If you are planning to use tiered storage with a mirrored volume, then Windows requires the number of SSDs in the storage pool to match the number of mirrored disks. For example, if you are creating a three-way mirror then you will need three SSDs.
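As an illustration of how a tiered, mirrored virtual disk might be created, the sketch below defines an SSD tier and an HDD tier and then builds a virtual disk across both. It assumes a storage pool named Pool01 already exists and contains enough SSDs and HDDs for the chosen resiliency; the pool name, tier names, disk name and tier sizes are all placeholders.

```powershell
# "Pool01" is a placeholder for an existing storage pool with SSDs and HDDs.
$pool = "Pool01"

# Define one tier per media type.
$ssdTier = New-StorageTier -StoragePoolFriendlyName $pool `
    -FriendlyName "SSDTier" -MediaType SSD
$hddTier = New-StorageTier -StoragePoolFriendlyName $pool `
    -FriendlyName "HDDTier" -MediaType HDD

# Create a mirrored virtual disk that spans both tiers.
# The tier sizes are illustrative and must fit the capacity of the pool.
New-VirtualDisk -StoragePoolFriendlyName $pool `
    -FriendlyName "TieredDisk01" `
    -StorageTiers $ssdTier, $hddTier `
    -StorageTierSizes 100GB, 900GB `
    -ResiliencySettingName Mirror
```

Because tiers are not supported on parity disks, the resiliency setting here should be Mirror or Simple.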

ReFS limitations

In Windows Server 2012, Microsoft introduced the Resilient File System (ReFS) as a next-generation replacement for the aging NTFS file system. ReFS also exists in Windows Server 2012 R2. Hyper-V administrators must consider whether to provision VMs with ReFS volumes or NTFS volumes.

If you are running Hyper-V on Windows Server 2012, then it is best to avoid using the ReFS file system, which has a number of limitations. Perhaps the most significant of these (at least for virtualization administrators) is that ReFS is not supported for use with Cluster Shared Volumes.

In Windows Server 2012 R2, Microsoft supports the use of ReFS on Cluster Shared Volumes, but there are still limitations that need to be taken into account. First, choosing a file system is a semi-permanent operation. There is no option to convert a volume from NTFS to ReFS or vice versa.

Also, a number of features that exist in NTFS do not exist in ReFS. Microsoft has hinted that such features might be added in the future, but for right now, here is a list of what is missing:

File-based compression (deduplication)

Disk quotas

Object identifiers

Encrypted File System

Named streams

Transactions

Hard links

Extended Attributes

With so many features missing, why would anyone use ReFS? There are two reasons: ReFS is really good at maintaining data integrity and preventing bit rot, and it is a good choice when large quantities of data need to be stored. The file system has a theoretical size limit of 1 yottabyte.

If you do decide to use the ReFS file system on a volume containing Hyper-V VHD or VHDX files, then you will have to disable the integrity bit for those virtual hard disks. Hyper-V automatically disables the integrity bit for any newly created virtual hard disks, but if any virtual hard disks were created on an NTFS volume and then moved to an ReFS volume, the integrity bit for those virtual hard disks needs to be disabled manually. Otherwise, Hyper-V will display a series of error messages when you attempt to start the VM.

You can only disable the integrity bit through PowerShell. You can verify the status of the integrity bit by using the following command:

Get-Item <virtual hard disk name> | Get-FileIntegrity

If you need to disable the integrity bit, do so with this command:

Get-Item <virtual hard disk name> | Set-FileIntegrity -Enable $false

Hyper-V is extremely flexible with regard to the types of storage hardware that can be used. It supports direct-attached storage, iSCSI, Fibre Channel (FC), virtual FC and more. However, the way that storage connectivity is established can impact storage performance, as well as your ability to back up your data.

There is an old saying, "Just because you can do something doesn't necessarily mean that you should." In the world of Hyper-V, this applies especially well to the use of pass-through disks. Pass-through disks allow Hyper-V VMs to be configured to connect directly to physical disks rather than using a virtual hard disk.

The problem with using pass-through disks is that they are invisible to the Hyper-V VSS Writer. This means backup applications that rely on the Hyper-V VSS Writer are unable to make file, folder or application-consistent backups of volumes residing on pass-through disks without forcing the VM into a saved state. It is worth noting that this limitation does not apply to virtual FC connectivity.

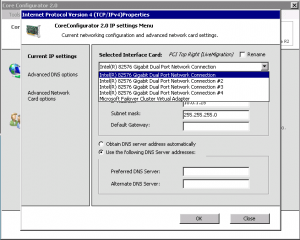

Another Hyper-V storage best practice for connectivity is to establish iSCSI connectivity from the host operating system, whenever possible, rather than from inside the VM. Depending on a number of factors (such as the Hyper-V version, the guest operating system and whether Integration Services are in use), storage performance can suffer when iSCSI connectivity is initiated from within the VM because of a lack of support for jumbo frames.